List

explanations and reflection

274 ,275 H-Index

Description

- H-Index

Medium

484810FavoriteShare

Given an array of citations (each citation is a non-negative integer) of a researcher, write a function to compute the researcher’s h-index.

According to the definition of h-index on Wikipedia: “A scientist has index h if h of his/her N papers have at least h citations each, and the other N − h papers have no more than h citations each.”

Example:

1 | Input: citations = [3,0,6,1,5] |

Note: If there are several possible values for h, the maximum one is taken as the h-index.

Explanations

That is an interesting question, and the main challenge is to understand the new conception brought in description: The H-index.

It says that, Given an array of citation numbers citi, an h-index is an number h that within the interval [0,citi.length] so that there are h paper has citations c >= h, and remaining papers has c<=h.

Analysis

First of all, this definition is way too complicated, let’s find a way to simplify it.

The beginning of problem analysis is always find a certifier. Given an number h, We examine it by iterates the whole array, and count the number of paper that has citations less or equal to h, namely $Num{under}$, and number$Num{upper}$ for papers that has citations above or equal to h. if $Num_{upper}$==h, then it is an valid H-index.

Emmm, I see, it is an NP problem! (LOL)

Okay, to be serious, now we knows how to examine the answer, and let’s talk about more different situations. For example, we could easily know that if $Num{upper}$ is smaller then h, the h must be bigger than any H-index. But what if we find $Num{upper}$ is bigger then h? Is there any way to find the right H-index?

The most naïve method is to increase h by one, and iterate the whole array to check if $Number{upper}$ decreased. If it really decreased, and currently get h=$Num{upper}$ , okay we get the right H-index. if $Num{upper}$ is still the same or it decreased but still bigger than h , we continuously decrease h, since h increases and $Num{upper}$ decreases, they would become equal eventually.

Do we really need to iterate the whole array in each increase? meh…let’s reconsider our model: Set $S{upper}$ for papers with citations >= h, and set $S{under}$for papers with citations <=h, initial h=0, so $|S_{upper}|=|citi|$ , now we have a set $Gree$. As the name, we put the paper with maximum citation among the remaining papers with citations >=h into $Gree$ each time h increases, and when there is no such paper, we say the h is the max h-index. Since each time we select the paper with max citation, we just need to sort the array in descending order and iterator over it.

Follow Up

275 says that if give you an ordered array……okay, then go for binary search, apparently.

code

1 | class Solution { |

45 jump game II

That is why I do not like leetcode hard, normally there would only be one way out, and it’s only depends on whether you have an “a-ha” moment or not.

Hard

169994FavoriteShare

Given an array of non-negative integers, you are initially positioned at the first index of the array.

Each element in the array represents your maximum jump length at that position.

Your goal is to reach the last index in the minimum number of jumps.

Example:

1 | Input: [2,3,1,1,4] |

Note:

You can assume that you can always reach the last index.

Explanations

The basic idea is straightforward, use DP to update the minimum jump number for each sub problem dp[i], and the time complexity upper bound is $O(N^2)$. Yet the data here is too large for an algorithm of this time complexity, and that is where our trick is.

Firstly, our basic idea is to update the optimal value of each position by the min jump number, e.g. for an array:[2,3,1,1,4], when we start iterating at the first position, the dp array would be like [0,inf,inf,inf,inf], and obviously if we start from index 0 to other position we would get this dp array [0,1,1,inf,inf], and then we process index 1 and the result should be [0,1,1,2,2] and we process index 2 and son on. Note that the dp recurrence formulate is

In plain English, the optimal value of an index $i$ is the minimum jumps number plus 1 of all the positions could reach to index $i$.

As you can see, the time complexity of this basic algorithm is $O(N^2)$ since in the worst case, each position would reach to all the following position, and that would be $N, N-1,N-2,…,1$ calculations, and that is $O(N^2)$.

And our goal is to optimize this update mechanism. In the update step, we update the following dp[i] linearly, and that is what we want to optimize. The most brute-force way is to use an segment tree, which could make range update in $O(logn)$. So the final time complexity of this problem would be $O(nlogn)$, and it would be painful to write a segment tree by yourself in an interview without a template.

Other solutions, which only works for this problem, is to make an “lazy update”. we maintain an interval where the minimum jump number in the interval is equal or below an certain number $C$. We continuously put new element into that interval and update $C$ lazily, and immediately stop when the last position is included into the interval.

11. Container With Most Water

Medium

4434496FavoriteShare

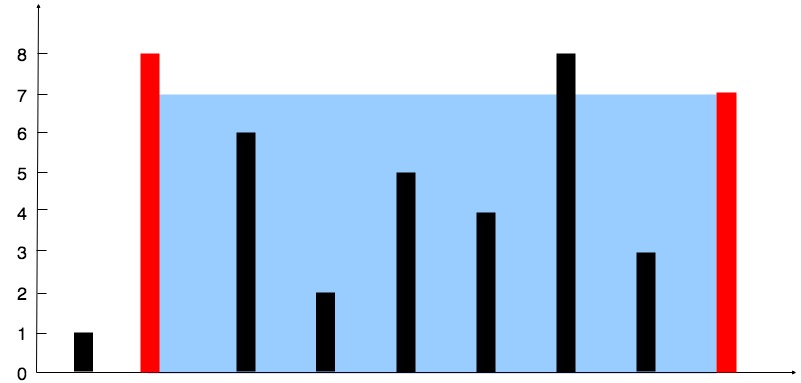

Given n non-negative integers a1, a2, …, an , where each represents a point at coordinate (i, ai). n vertical lines are drawn such that the two endpoints of line i is at (i, ai) and (i, 0). Find two lines, which together with x-axis forms a container, such that the container contains the most water.

Note: You may not slant the container and n is at least 2.

The above vertical lines are represented by array [1,8,6,2,5,4,8,3,7]. In this case, the max area of water (blue section) the container can contain is 49.

Example:

1 | Input: [1,8,6,2,5,4,8,3,7] |

Explanations

TBH, I am quite confused at the beginning since I just dig deep in DP and Greedy, and only found that those do not work. After checked the optimal solutions for this problem, I found that I am not good at two pointers and decide to design a kind of analysis pattern for two pointer problem.

First of all, I claim that, the essence of two pointer method is to eliminate candidate optimal solutions in the solution space. In other word, you prove that your solution is better than some other solutions in the solution space, so you could save time of checking whether they are optimal solution or not, for you have checked a better solution( which is, the current solution.)

Secondly, there are two kinds of two pointers method, the first is to set both the head and rear pointers at the first element in the array(or the last, basically the same), and another is to set the head pointer at the first element and the rear pointer at the last element and “shrink” the interval formed by the head pointer and the rear pointer.

Therefore, maybe we could just try both two method and see whether we could find a way to eliminate the candidate solution. In other words, try to prove that the current solution is better than some other unchecked solutions in the solution space.

334 Increasing Triplet Subsequence

Medium

Given an unsorted array return whether an increasing subsequence of length 3 exists or not in the array.

Formally the function should:

Return true if there exists i, j, k

such that arr[i] < arr[j] < arr[k] given 0 ≤ i < j < k ≤ n-1 else return false.

Note: Your algorithm should run in O(n) time complexity and O(1) space complexity.

Example 1:

1 | Input: [1,2,3,4,5] |

Example 2:

1 | Input: [5,4,3,2,1] |

explanations

it’s very intuitive to come up with a brute force algorithm where you record the number of previous smaller element for each element in the array, like [1,2,3,4,5]=>[0,1,2,3,4]. Based on that, we notice that we only care about there is such 3 elements i,j,k that arr[i] < arr[j] < arr[k] given i<j<k. In other words, we care about whether there is a element that has a previous smaller element that has a previous smaller element.

Back to the brute force algorithm, and we name the mapped array [0,1,2,3,4] as previousSmallerArray(PSA as abbreviation ). We observe it, and we guess, if there is an element bigger than 2 in PSA, then we could found the subsequence we want. However, it is not guaranteed for the counter example [2,1,3]=>[0,0,2] and there is not a valid subsequence for this question.

Ok, now we found that the most obvious idea is wrong, then what should we do next? Aare we in the totally wrong direction? Maybe we just need some small modification. Look back at the definition, we could see that the middle index, j, is the critical point: it takes the role linking i and k, and what is the cost of finding all the j? Well, it should take $O(n^2)$ to find a valid pair of $i,j$, but it would cost linear time to find if an index could be a $j$: Start iterating from beginning and use a variable $Min$ to record the current minimum number. In each iteration, try to compare variable $Min$ and current value, if the element is bigger than the $Min$, the index of this element should be a candidate $j$.

So this is an interesting part of algorithm, finding an exact pair and finding the existence of a pair is quit different, and the latter could be done in linear time.

Things become clear now, and we just use the same strategy to iterator the array again, but at this time, we aim for $k$, and a valid $k$ should has a prior $j$ that $arr[j]<arr[k]$, and it could also be completed in linear time by recording a minimum $arr[j]$.

Code

1 | class Solution { |